For my Cognitive Science thesis during my senior year at Vassar, I decided to study the pathways of learning that allow people to transfer their knowledge from one area of learning to another. An example of this might be seen in learning to read and write. When learning to read, a general idea is that it will help you to write.

Reading and writing is a fairly straightforward example that uses two areas that are close together in their similarity. Both reading and writing use letters, numbers and symbols as a method of receiving or encoding knowledge. They share many similar superficial features.

What’s perhaps more interesting is the case of conscious transfer learning. In people, and perhaps in other animals, we are able to consciously abstract features from a domain and identify connections between two dissimilar domains. These identified connections can help us to leverage our learning from one domain in another.

To give a concrete example of conscious transfer, let’s say you learn to play chess, and one of the strategies you learn that helps you to win is to “control the center.” I haven’t played a lot of chess (so what I say next could be completely wrong), but I can take an educated guess that it means to use your pieces to control the center space of the board. Now, if we take that principle, and try applying it to another domain such as winning elections in politics, we would ask, how do we “control the center in politics”? Perhaps controlling the center of the political spectrum, so staying appealing to a moderate audience would be my guess. The point is, to “control the center” is a concept that can be abstracted from the domain of chess and applied to a situation that is completely different in its manifestation, like politics. By applying the abstract principle of controlling the center from chess to a domain like politics, the possibility of positive transfer learning effects emerge.

Near transfer is transfer learning that occurs between two similar domains. Far transfer is transfer learning that occurs between two dissimilar domains.

Near transfer is a process that can occur semi-automatically. Going back to our example of reading and writing, one can see the many shared similarities between reading and writing. There is not much abstraction that needs to be done to see how learning to read can help you to learn to write. Learning the alphabet and the spelling of words, which are necessary skills for reading will be massively helpful when trying to construct sentences.

Far transfer on the other hand is a process that may take more “conscious” thought and abstraction to find principles that apply across multiple domains. There is also still the issue of executing a concept in a new domain that may have many differences. Controlling the center in politics may involve gaining votes through campaigning while controlling the center in chess may involve jockeying for control of the center of the chess board with an opponent. There is an extra step in far transfer. One must learn an abstract principle from one domain, and then learn how to execute it in a new domain.

To study this in my thesis, I used Robot Operating Software (ROS), Gazebo a world simulator and OpenAI ROS, a ROS library that connects OpenAI and ROS.

The reason for using ROS and Gazebo was the ability to simulate embodied agents via robots in a world with realistic physics simulation.

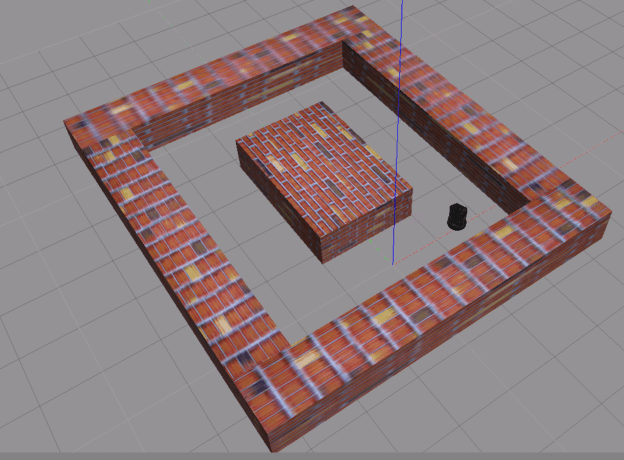

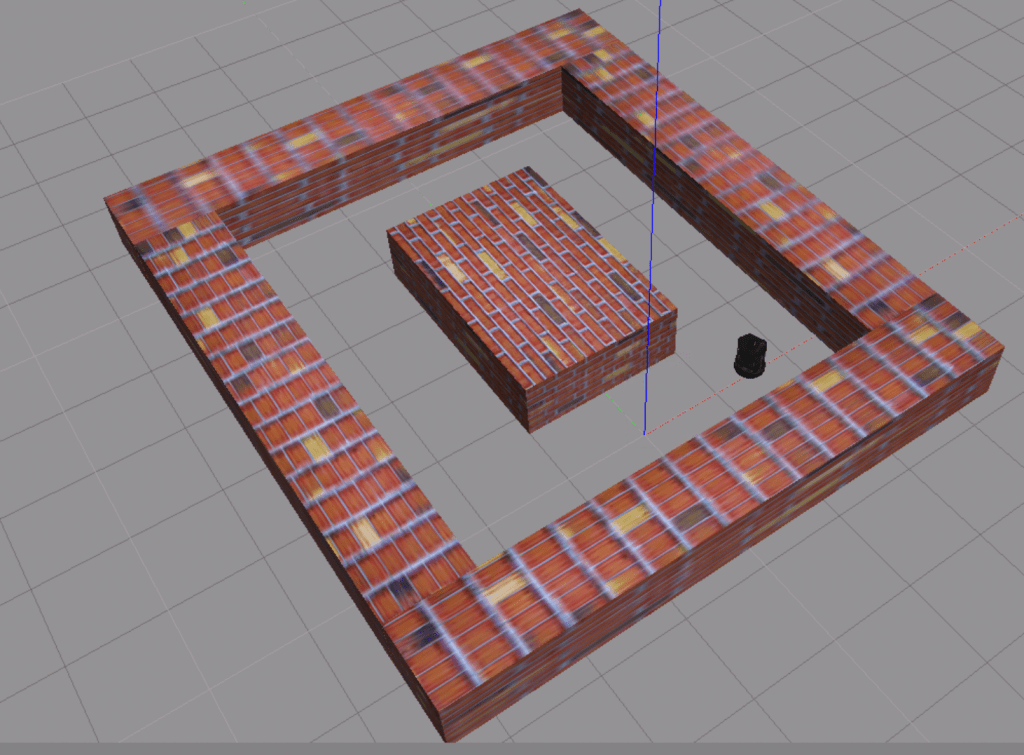

An example Gazebo world with a Turtlebot loaded in can be seen below. The Turtlebot starts at the intersection of the blue, red and green axes.

In the experiment, this brick world was the main training arena for the turtlebot. This served as the “original” domain from which the turtlebot hopefully stored knowledge of how to navigate in its connection weights. For the experiment, I used a length of 50 episodes for each “run”.

I used a neural network built with Keras to model learning in the robots. Deep q-learning was used, in which the best possible action is mapped to each state at each time step.

In the initial experiment, the neural network was very simple, with only 3 layers deep and had a linear activation. The reason for using a 3 layer network was simply due to time constraints and the computing power I had at the time. I’m working right now off an M1 MacBook Pro chip which is fairly fast but lacks a GPU to really be able to run a neural network with more layers. This might be one area of research that could let me play with the learning parameters that are used in the algorithm and test more variations of the experiment for thoroughness.

Qualitatively, the parameters of the experiment were fine. I was able to get a robot that displayed mildly intelligent behavior. For the most part it would move in a straight line and it was able to slightly learn how to turn the corner. However, if I were able to add more layers to the network, it might be able to fully navigate the maze, which is the target behavior I was looking for in starting the experiment.

One bottleneck that I faced was the fact that I had to do most of my coding contained within a virtual machine hosted on a web browser. Though it was easy to use, the website that I used to host the project was not the fastest computation.

The main reasons I chose to use the framework had a few parts.

- I relied heavily on OpenAI ROS, which is a library that was developed for an older version of ROS (Kinetic) that is not currently supported by the most recent version of Linux that is currently supported (16.04).

- I did not have a lot of time to develop my own framework.

- OpenAI ROS was the best I could do at the time to test the ideas that I wanted to test. Again the ideas I wanted to test were positive and negative transfer across learning domains using as close to real world situations as I could, which in this case was a simulation using Gazebo.

As a side note: My Professor was a big proponent of using experiments that were real world rather than simulation, which is why I might have got a B on my thesis. I originally had planned to conduct the experiment using real robots in the Cognitive Science laboratory, but I chose to instead have the experiment be able to be tested and conducted fully on my computer. I wanted to be able to work on my thesis no matter where I was and it cut down on the time I needed to develop the code. Looking back at this, I could have conducted the experiment with real robots. However, I did not think conducting it with real robots would give me much benefit compared to running the experiment in Gazebo, which is a very realistic and faithful simulator of real world physics. Many developers I believe use Gazebo to first test robots and then deploy them in the “real world”. If I can first get the behavior that I am looking for in ROS and Gazebo, then I might start thinking about extending it to real world experiments.

Regardless, from this point on I need to start thinking about how I’m going to continue this research.

P.S. I’m taking courses right now in Business Strategy and Strategic Management, and one idea that came up that I thought was analogous to the learning transfer in management is the idea of horizontal and vertical integration in organizations. It’s easier in an organization to pinpoint the areas that affect your profits, whether they are, for example, increasing your revenues or decreasing your costs, in a vertically integrated organization. However, once you get an organization with different businesses running under a single umbrella, interaction effects take place and it’s harder to say whether an increase in profits in one arm of your business is tied to that business doing well or because one of your other businesses is doing well and causing your other businesses to do better as a result. In other words, positive and negative transfer effects may be occurring across the organization and it is much more complex to track. This is similar to an idea, fragile coadaptation, that came up in research on learning transfer effects, in which the interactive effects of connections between layers in a neural network are difficult to replicate if you freeze one of the layers.

Another important nuance to include that came up during one of my lectures in Business Strategy, is that there is a certain flow to how we process information that is oversimplified in neural networks today. In the neural networks that I tested, linear activation was used which basically connects the layers in a one-way street fashion. Analogous to this are flow charts that we come up with to solve problems. One flow chart that was used by my professor in Business Strategy was to solve a marketing case.

The flow chart was thus:

- Market analysis

- Marketing Strategy Formulation

- Marketing Strategy Implementation

In a way, my thesis connects to almost any activity or skill you can learn, but the connection here was amazing to me.

According to my professor, who is a professional and has deep expert knowledge in the domain of business strategy (he has a Phd), the best way to approach teaching and learning is through this framework.

First you analyze the context of a market. In the example case we used it was the Brazilian market for laundry detergent and soap. There were two main players, Unilever and Procter & Gamble.

Then, you formulate a strategy, based on the context of the market. Formulation of a strategy in this case regarded how to increase market capture in the Northeast of Brazil, which was largely untapped and catered to without cannibalization of their own sales. Interestingly nested within this case was the idea of cannibalization. Cannibalization within the domain of business strategy is the idea that when launching a new product or looking to extend an existing product in order to enter a new market or capture more of an existing market, you risk cannibalizing your own sales. In other words, people that you are already selling to with an existing product might by this other product, and it may be that it reduces your overall profits. For businesses that have a high priority on maximizing profits, this is not a good outcome.

Cannibalization in this case reminded me heavily of the idea of negative transfer in learning. When you are trying to learn a new concept, you are developing new skills or maybe leveraging existing skills to learn in a new domain. There is the risk when you are trying to learn this new concept or skillset that you “cannibalize” your existing knowledge or learning.

Anyway, putting that idea aside, the last step is to implement the strategy. In the case that we were looking at, this consisted of finding distribution channels and optimizing coverage of the market while keeping costs low and sales high. Additionally, a key question in any business case is whether the increased profits are sustainable. This might mean keeping competitors out or trying to come up with a value add for customers that cannot be easily imitated by competitors.

Finally, getting to the most interesting point, although it’s all interesting to me, is an off-hand comment that my professor made to me when he was giving his lecture…

He said something to the effect of “This is a flow chart that we are using, but when you are applying this flow chart to a real world (business) problem, you iterate between steps constantly. Once you get to the implementation, it may change how you want to look at your analysis of the market, or it may change how you want to formulate your strategy, which then may again change your strategy (hopefully for the better). But you are constantly looping (he said iterating) between steps.”

And this is interesting to me because I just read in a book about Nunez about the way the brain works. Consciousness arises out of feedback loops between a distributed system of neural networks, and it takes about 500 ms for a thought to become conscious from its inception to its actual ability to be recognized in a self-aware manner.

I know I’m all over the place here, but if you will, this is the way in which I loop and think about things. This basically means that although the research using neural networks contended that the initial layers are the general, abstract and conscious layers, it may be the case that neural networks are missing something. MAYBE, and I put this in big letters because it’s a long shot but an idea worth considering I think, neural networks are flipped. Because the preconscious thought that leads to an eventual conscious act indicates that the initial layers which are the most abstract are not actually conscious or voluntary for us until 500 ms in. Maybe I need to do more research into exactly what Nunez means.

Or, this might be the more obvious, Occam’s Razor type answer, our language for considering and studying consciousness is bad. It mixes up terms and makes it hard for anyone across the field to precisely know exactly what anyone else is actually talking about.

It could be that maybe it simply takes time for us to be aware of an act. And regardless, my initial statement remains the same. If Nunez is correct, perhaps we need to think about flipping neural networks. Because according to current literature on learning transfer, the last layers in a neural network are the ones that are situation specific and “unconscious knowledge”. However, I could also see the other way. There is the possibility that it starts out as preconscious, but still abstract knowledge, that eventually turns into situation specific knowledge that we become able to consciously decide how to use. I think there needs to be a clarification in the literature and unification of how we think of ideas.

In business strategy, maybe because it makes so much money and therefore there is more of an incentive to be clear and practical, everyone agrees on certain things, certain terms and are consistent in the way they speak and do things. It makes it a lot easier.

I think it’s cool stuff.

This really makes me want to redo my experiment with a sparse neural network which may be able to more closely approximate our actual brain structure. I also want to be able to feed neural networks outputs to each other and in a way distribute the knowledge structure and have them able to feed back to each other and maybe have more specialization for certain tasks. For example, one neural network can be very good at abstracting in the domain of navigating, seeing and moving. And the other neural networks can be very good at implementing the general knowledge in certain situations.